REST Consumers

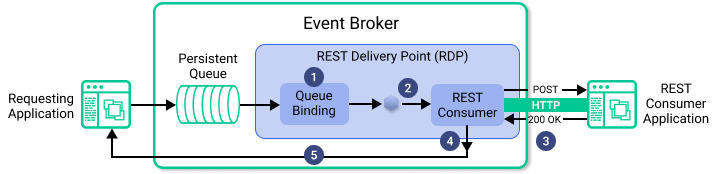

For REST consumers, the Solace event broker establishes an HTTP connection and sends messages using an HTTP POST request as shown in the following diagram.

The REST application acknowledges receipt of the message by returning a 200 OK HTTP response to the event broker. The format of the REST POST request that the application receives, and the available response options, are outlined in the REST Messaging Protocol, Solace REST HTTP Message Encoding and Solace REST Status Codes.
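As an illustration of the exchange described above, the following is a minimal sketch of a REST consumer application written with Python's standard `http.server` module. The handler name, the `make_server` helper and the loopback address are assumptions made for this example and are not part of any Solace API. The sketch only reads the POST body and acknowledges it with 200 OK.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


class ConsumerHandler(BaseHTTPRequestHandler):
    """Hypothetical REST consumer: accepts a POSTed message and replies 200 OK."""

    def do_POST(self):
        # Read exactly the number of bytes announced by the sender.
        length = int(self.headers.get("Content-Length", 0))
        payload = self.rfile.read(length)
        print(f"received {len(payload)} bytes for target {self.path}")

        # 200 OK acknowledges receipt of the message to the event broker.
        self.send_response(200)
        self.send_header("Content-Length", "0")
        self.end_headers()

    def log_message(self, fmt, *args):
        # Silence the default per-request logging to keep the example quiet.
        pass


def make_server(host="127.0.0.1", port=0):
    """Create the server; port 0 asks the OS for any free port."""
    return HTTPServer((host, port), ConsumerHandler)
```

To run the sketch, call `make_server(port=8080).serve_forever()` and point a REST consumer object at that host and port. The port number 8080 is only an example value.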

To receive messages from the event broker, some configuration is required to provide the event broker with details on how to deliver the messages. This configuration is outlined in the following section. This section also provides an outline of request/reply handling for REST consumers and some integration patterns for scaling applications.

Solace Event Broker Configuration Objects

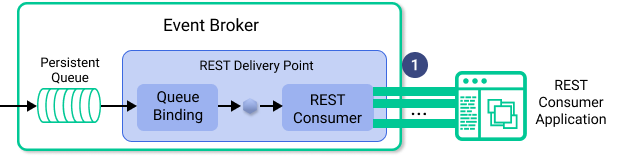

The following diagram outlines the configuration objects that play a role in delivering HTTP messages to a REST consumer. The RDP encapsulates delivery of messages to a set of one or more REST consumer clients. Messages are received at the RDP from persistent message queues based on configured queue bindings. Configured REST consumer objects encapsulate all of the connectivity information for a given REST consumer application. The RDP is then responsible for selecting an appropriate REST consumer and delivering the message.

REST Delivery Point

For REST applications to receive messages, the event broker must establish HTTP connections to the REST consumer applications and deliver messages. The information needed to establish these connections and to manage message delivery must be configured on the event broker. A REST Delivery Point (RDP) is a configuration object that links the message queues that attract messages to the REST consumer applications that take delivery of those messages.

Therefore, an RDP object performs the following roles:

- An RDP is configured with bindings to persistent message queues which attract messages to be delivered to REST consumers.

- The RDP schedules newly-arriving messages for delivery over HTTP/REST connections to REST consumers. Traffic management performed by the RDP is discussed in the following sections.

- The REST consumer object handles the connectivity to the REST consumer application. The application’s IP/DNS name and TCP port number, along with other connectivity details, are set in the REST consumer object.

Queue Binding

A queue binding object exists within the scope of an RDP. An RDP can have one or more queue bindings, and at least one queue binding is needed for traffic to flow. Queue bindings allow the RDP to bind to a persistent message queue on the event broker and then receive messages from it.

A POST request target is also configured within a queue binding. The POST request target is used for all messages originating from the physical queue. It is possible to indicate the source of the message to the REST consumer application using a POST request target.

REST Consumer

REST consumer objects establish HTTP connectivity to REST consumer applications that want to receive messages from a Solace event broker. Each consumer application is identified by an IP/DNS name and TCP port number. An event broker will typically have multiple TCP/HTTP connections open to each REST consumer to allow delivery of many messages in parallel. There can be many REST consumers configured in an RDP to allow for a higher rate of message delivery as well as to support fault tolerance.

One key architecture pattern to understand is that all REST consumers within an RDP are considered to be equal destinations for messages. Therefore, an incoming message may be sent over any REST consumer connection within the RDP.

By default, the event broker automatically chooses the IP interface through which outgoing connections from a REST consumer are made. However, if your environment requires a fixed IP interface, it is possible to configure a specific interface for the REST consumer so that the source IP address specified in all outgoing packets will be the same for all connections associated with the REST consumer. A fixed IP interface may be required, for example, if a firewall is used between a client application and the event broker. In this case, the firewall must be configured to permit the event broker’s source IP address through, so an automatically-selected IP interface can be problematic.

Message Delivery Walk Through

The following diagram shows how messages are delivered to REST consumer applications.

- Messages arrive at persistent message queues from an event broker client.

- RDP queue bindings connect the RDP to persistent queues. As each message is received from the persistent message queue through the queue binding, the message is augmented with the POST request target.

- The RDP then converts the message from SMF to a properly formatted REST message, as outlined in REST Messaging Protocol.

- The RDP selects an appropriate outgoing REST consumer and HTTP connection to send the message.

- The RDP sends the message to a connected REST consumer application.

Request / Reply

The Solace event broker also supports a request/reply message exchange pattern with REST consumer applications. The following diagram and steps outline how this works.

- The message is received at an RDP through a persistent queue.

- When the RDP is creating the REST message to send, it looks for a reply-to destination in the received SMF message. If a reply-to destination is present, the RDP enables request/reply processing. The outgoing REST consumer connection is selected in the same way as before.

- The REST consumer application sends the response back to the event broker in the body of the 200 OK HTTP response to the POST request.

- When the RDP receives the response, it converts the response found in the 200 OK HTTP response into an SMF message for internal routing. The destination of the message will be the reply-to destination from the original message.

- The message is routed normally in the event broker and returned to the application that made the request.
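From the consumer application's point of view, the steps above mean that the reply travels in the body of the 200 OK response. The following sketch shows one way a consumer could do this with Python's standard `http.server` module. The `compute_reply` function, the handler name and the `text/plain` content type are hypothetical choices for this example. The reply-to handling itself happens inside the RDP and is not visible in this code.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def compute_reply(request_body: bytes) -> bytes:
    """Hypothetical business logic: echo the request in upper case."""
    return request_body.upper()


class ReplyingHandler(BaseHTTPRequestHandler):
    """Returns the reply in the body of the 200 OK response to the POST."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        reply = compute_reply(self.rfile.read(length))

        self.send_response(200)
        # Content type chosen for the example only.
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(reply)))
        self.end_headers()
        # The response body is what the RDP turns into the reply message.
        self.wfile.write(reply)

    def log_message(self, fmt, *args):
        # Silence the default per-request logging.
        pass


def make_reply_server(host="127.0.0.1", port=0):
    """Create the server; port 0 asks the OS for any free port."""
    return HTTPServer((host, port), ReplyingHandler)
```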

REST Consumer Connection Selection

An RDP within the Solace event broker attempts to distribute traffic across outgoing REST consumer connections using the following criteria:

- A REST consumer is selected in a round robin fashion from the group of REST consumers that have at least one available HTTP connection.

- The outgoing HTTP connection used will again be selected in a round robin fashion from the group of available HTTP connections within that REST consumer.

This connection selection process provides better overall performance because it avoids concentrating traffic on any particular REST consumer. A REST consumer connection is considered available when it is connected and has no pending HTTP messages, which means it is free to send a new HTTP POST message.

For example, if five messages need to be simultaneously delivered through an RDP, and there are five REST consumers, it is better to send each message to a separate REST consumer than to send all five messages to the same REST consumer even if that consumer has five available HTTP connections.
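The two-level round robin described above can be modelled in a few lines. The following is a simplified sketch and not the event broker's implementation: the class names and the `pending` flag are assumptions for illustration, and "available" is reduced to "has no pending POST".

```python
from dataclasses import dataclass


@dataclass
class Connection:
    name: str
    pending: bool = False  # True while a POST is awaiting its response


@dataclass
class RestConsumer:
    name: str
    connections: list
    next_conn: int = 0  # round-robin cursor within this consumer

    def pick_connection(self):
        """Return the next available connection, or None if all are busy."""
        count = len(self.connections)
        for offset in range(count):
            idx = (self.next_conn + offset) % count
            if not self.connections[idx].pending:
                self.next_conn = (idx + 1) % count
                return self.connections[idx]
        return None


class Rdp:
    def __init__(self, consumers):
        self.consumers = consumers
        self.next_consumer = 0  # round-robin cursor across consumers

    def select(self):
        """Pick (consumer name, connection name) for the next message."""
        count = len(self.consumers)
        for offset in range(count):
            idx = (self.next_consumer + offset) % count
            conn = self.consumers[idx].pick_connection()
            if conn is not None:
                self.next_consumer = (idx + 1) % count
                conn.pending = True  # busy until the response arrives
                return self.consumers[idx].name, conn.name
        return None  # no connection is currently available
```

With five consumers that each have five idle connections, five consecutive calls to `select()` return one connection on each of the five consumers, which matches the example in the preceding paragraph.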

Message Sequencing and Redelivery

When a REST consumer application responds to a message with an HTTP error response (that is, any response other than 200 OK), the RDP negatively acknowledges this message back to the persistent message queue. Depending on the settings of the persistent message queue, the message may be redelivered to the RDP for processing. Redelivered messages follow the same message processing as new messages.

The number of times a message can be redelivered to the RDP is controlled by the “max-redelivery” property of the message queue. By default this parameter is set for infinite retry, which avoids message loss. However, this persistent queue property can be tuned depending on application-specific needs.

If all message redelivery attempts are exhausted, the message follows normal dead message queue (DMQ) handling. For applications to make use of the DMQs, an appropriate value must be set for the max redelivery count property of persistent queues.

On event broker versions 10.12.0 and later, you can configure a list of 4xx and 5xx HTTP status codes that the event broker treats as message rejections rather than delivery failures. When a "status-code" that has been given the "rejected" property is returned from the REST consumer, no redelivery attempts are made and the message is immediately discarded or moved to the DMQ if one exists and the message is eligible.
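The redelivery rules in this section can be summarized as a small decision function. This is a sketch of the behaviour as described above, not broker code. The function name, the use of `None` to mean infinite retry, and the choice to count the first delivery separately from redeliveries are all assumptions of this example.

```python
def deliver(status_codes, max_redelivery=None,
            rejected_codes=frozenset(), dmq_eligible=False):
    """Return (outcome, attempts) for a single message.

    status_codes   -- HTTP status the consumer returns on each attempt, in order
    max_redelivery -- redeliveries allowed after the first attempt;
                      None models infinite retry (an assumption of this sketch)
    rejected_codes -- status codes treated as rejections (no redelivery)
    dmq_eligible   -- True if a DMQ exists and the message is eligible for it
    """
    attempts = 0
    for status in status_codes:
        attempts += 1
        if status == 200:
            return "delivered", attempts
        if status in rejected_codes:
            break  # rejection: give up immediately, no redelivery
        if max_redelivery is not None and attempts > max_redelivery:
            break  # redelivery attempts are exhausted
    else:
        # Ran out of simulated responses while the message is still retrying.
        return "pending", attempts
    return ("dmq" if dmq_eligible else "discarded"), attempts
```

For example, `deliver([503, 503, 200])` models a message that succeeds on its third attempt under infinite retry, and `deliver([400], rejected_codes={400}, dmq_eligible=True)` models a rejected message that moves to the DMQ after a single attempt.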

Message Sequencing

Typically, there is no guarantee of message ordering for REST consumers. Messages on different HTTP connections can reach the consuming REST application in any order. Additionally, redelivery of messages can result in out-of-order delivery of the redelivered message.

In general, message ordering is not a requirement for REST consuming applications. For applications where this is a strict requirement, an RDP with a single REST consumer and a single outgoing REST HTTP connection will maintain message order at the expense of message throughput.

Connection Handling on Errors

Most often when a REST consuming application fails to process a message, immediate redelivery of this message to the same application will result in a similar failure in processing. Therefore, RDPs implement a hold-down timer for connections that receive an HTTP response other than 200 OK. In these scenarios, the connection with which the POST request was sent is not reused for the “retry delay” period (the default value is three seconds).

While a REST consumer connection is in the hold down state, it will not be scheduled to receive any outgoing POST requests. This has two beneficial effects:

- First, it greatly reduces the error-processing load on the event broker, the network, and the REST consumer application.

- Second, if there are other REST consumer connections available that are successfully receiving POST responses, the RDP will direct the majority of the outgoing messages towards those connections.
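The hold-down behaviour can be pictured as a per-connection timestamp before which the connection is not scheduled. The sketch below uses an explicit `now` argument instead of a real clock so that it stays deterministic. The class and method names are hypothetical, and the three-second default mirrors the retry delay mentioned above.

```python
class HeldConnection:
    """Models one REST consumer connection with a hold-down timer."""

    def __init__(self, retry_delay=3.0):
        self.retry_delay = retry_delay  # seconds, per the default noted above
        self.held_until = 0.0           # time before which no POST is scheduled

    def on_response(self, status, now):
        """Record an HTTP response; anything but 200 starts the hold-down."""
        if status != 200:
            self.held_until = now + self.retry_delay

    def is_schedulable(self, now):
        """True if the connection may be given a new POST at time `now`."""
        return now >= self.held_until


def schedulable(connections, now):
    """Return the connections that are not in the hold-down state."""
    return [c for c in connections if c.is_schedulable(now)]
```

If one of two connections receives a 500 response at time 10.0, only the other connection is returned by `schedulable` until time 13.0, so traffic shifts towards the connection that is still succeeding.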

Performance Considerations

The Solace event broker must wait for a response before sending the next message because of the blocking nature of the HTTP delivery to the REST consumer. Therefore the performance of a single HTTP connection is always bound by the round-trip time of one message. This time depends on the quality of the network between the event broker and the REST consumer and how quickly the REST consumer can process messages.

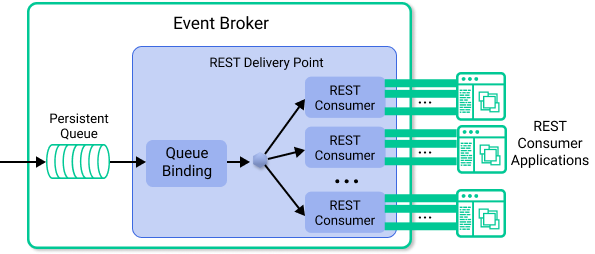

As shown in the accompanying diagram, there are two main options to consider for increasing the overall system performance.

- Increase the number of connections per REST consumer.

- Increase the number of REST consumers used.
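Because each connection carries one message per round trip, a rough upper bound on the delivery rate is the total number of connections divided by the round-trip time. The helper below is a back-of-the-envelope sketch with a hypothetical function name. It assumes a constant round-trip time and consumers that can process all connections in parallel, so real throughput will be lower.

```python
def max_messages_per_second(round_trip_seconds,
                            connections_per_consumer=1, consumers=1):
    """Idealized upper bound on the message rate through an RDP.

    Each connection has at most one outstanding POST, so it completes
    at most 1 / round_trip_seconds messages per second.
    """
    if round_trip_seconds <= 0:
        raise ValueError("round_trip_seconds must be positive")
    total_connections = consumers * connections_per_consumer
    return total_connections / round_trip_seconds
```

For instance, with a 10 ms round trip a single connection is limited to about 100 messages per second, while three consumers with four connections each raise the bound to about 1200 messages per second.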

Scaling Connections per REST Consumer

When the REST consumer application has ample processing headroom and performance is limited only by the network round-trip time, you can add outgoing HTTP connections as shown in the following figure. This can eliminate the network as the limiting factor in performance.

Scaling REST Consumers

The next most common limiting factor in performance is the REST consumer application itself. Often these applications can be horizontally scaled to allow for more parallel processing of incoming messages. The Solace event broker supports this by allowing RDPs to contain many REST consumers. As shown in the following diagram, message delivery is then shared across REST consumers.

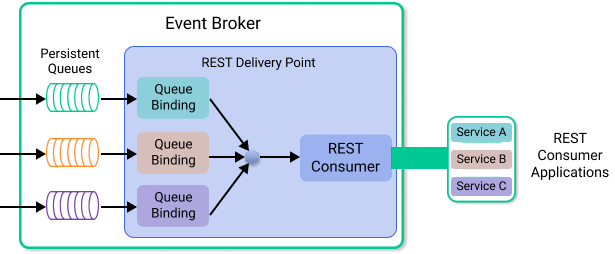

Dynamic Routing of Message Content by REST Consumers

REST consumer applications are often configured to listen for incoming traffic on a low number of input ports. Incoming HTTP traffic is routed to the correct internal service based on the HTTP POST request target or URI associated with the message. As shown in the following figure, this is the most common use case for multiple queue bindings within an RDP.

Messages for Service A can be routed to a single persistent queue. The queue binding within the RDP can associate the correct POST request target so that the REST consumer application can route the message internally to Service A. A similar setup can be used for Services B and C.
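Inside the REST consumer application, this pattern amounts to a lookup from the POST request target to an internal service. The following sketch shows one possible dispatcher. The targets `/service-a`, `/service-b` and `/service-c` and the handler functions are hypothetical names for this example, and they would need to match the POST request targets configured on the queue bindings.

```python
from urllib.parse import urlsplit


def handle_service_a(body: bytes) -> bytes:
    return b"A handled: " + body


def handle_service_b(body: bytes) -> bytes:
    return b"B handled: " + body


def handle_service_c(body: bytes) -> bytes:
    return b"C handled: " + body


# Hypothetical mapping from POST request target to internal service.
ROUTES = {
    "/service-a": handle_service_a,
    "/service-b": handle_service_b,
    "/service-c": handle_service_c,
}


def dispatch(request_target: str, body: bytes):
    """Return (HTTP status, response body) for one incoming POST."""
    path = urlsplit(request_target).path  # ignore any query string
    handler = ROUTES.get(path)
    if handler is None:
        # Any status other than 200 is seen by the RDP as a failed delivery.
        return 404, b""
    return 200, handler(body)
```

A handler such as the `do_POST` method of an HTTP server would call `dispatch(self.path, payload)` and send back the returned status and body.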

This is a common integration pattern and, as shown later, is often used in DataPower integration.