Using an Integrated Load Balancer Solution

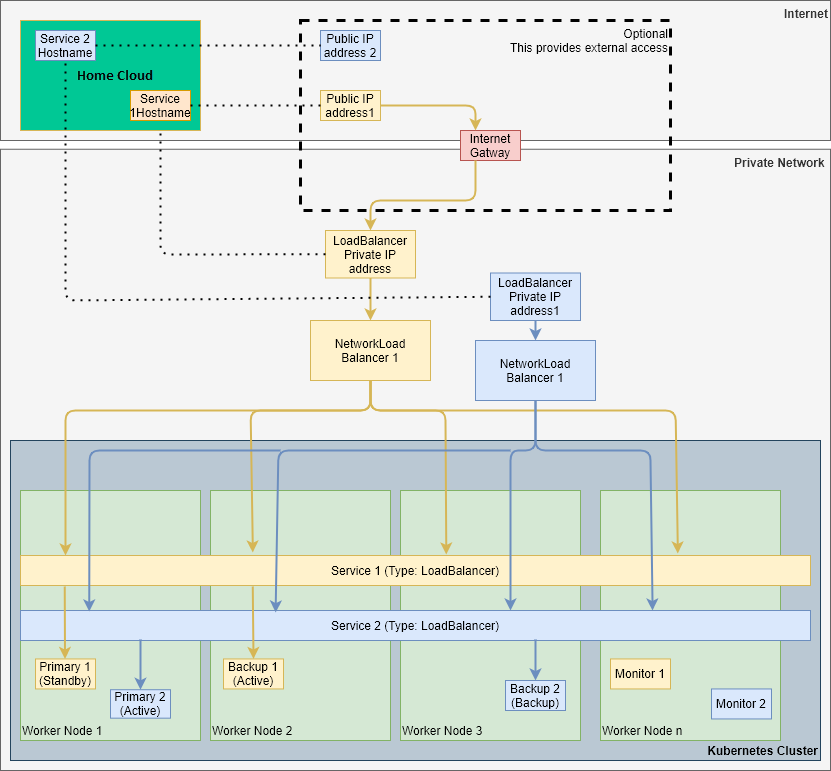

Solace recommends that customers use a load balancer that's integrated with Kubernetes (e.g., MetalLB, VMware NSX-T) to expose event broker services running in the Kubernetes cluster to external traffic. To support this setup, the customer should confirm with their Kubernetes administrator whether Kubernetes Services of type LoadBalancer are supported and whether any special annotations are needed to create an external network load balancer.

To use an external load balancer, the customer must be aware of the following:

- The customer must configure the Mission Control Agent so that it creates a Kubernetes Service of type LoadBalancer. This configuration automatically attaches public IP addresses to the LoadBalancer Services so that event broker services are accessible from the Internet. The network load balancer takes care of routing requests appropriately within the Kubernetes cluster.

- Each event broker service has its own dedicated network load balancer, and that load balancer consumes one IP address. The customer must ensure that they have enough load balancers and external IP addresses for the number of event broker services they want to expose. If the services are exposed on the Internet, these must be public IP addresses. For example, three load balancers and three public IP addresses are required to expose three event broker services publicly over the Internet.
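
As a rough sizing aid, the one-load-balancer-per-service rule can be written as a short Python sketch. The `BrokerService` type and `plan_addresses` function are hypothetical planning helpers, not part of the Mission Control Agent or any Solace tooling. The sketch assumes that every exposed event broker service gets exactly one network load balancer and one IP address, and that the IP address must be public only when the service is reachable from the Internet.

```python
from dataclasses import dataclass


@dataclass
class BrokerService:
    """Hypothetical planning record for one event broker service."""
    name: str
    internet_facing: bool  # True if clients connect over the public Internet


def plan_addresses(services: list) -> dict:
    """Count the load balancers and IP addresses needed to expose the services.

    Assumes one dedicated network load balancer, and therefore one IP address,
    per exposed event broker service.
    """
    load_balancers = len(services)
    public_ips = sum(1 for s in services if s.internet_facing)
    return {
        "load_balancers": load_balancers,
        "ip_addresses": load_balancers,  # one IP address per load balancer
        "public_ip_addresses": public_ips,
    }


if __name__ == "__main__":
    # The example from the text: three services exposed publicly over the Internet.
    services = [BrokerService(f"broker-{i}", internet_facing=True) for i in range(1, 4)]
    print(plan_addresses(services))
    # prints {'load_balancers': 3, 'ip_addresses': 3, 'public_ip_addresses': 3}
```

Services that are reachable only from the customer's private network still consume a load balancer and an IP address, but not a public one.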

Solace recommends that the customer use a network load balancer with an L4 load balancing strategy that fronts the Kubernetes nodes. The benefit of using a network load balancer is that it exposes a single endpoint that isn't affected if any of the Kubernetes worker nodes go out of service. The network load balancer also ensures that traffic is routed only to worker nodes that are operational.

Solace also recommends setting the externalTrafficPolicy of the LoadBalancer Service to Local. The Local policy preserves the source IP addresses of connecting client applications, which allows your event broker services to determine which clients are connecting to them.
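
For reference, a Service configured this way is an ordinary Kubernetes Service with `spec.type` set to `LoadBalancer` and `spec.externalTrafficPolicy` set to `Local`. The Python sketch below builds such a manifest as a dictionary and prints it as JSON, which can be compared with the output of `kubectl get service <name> -o json`. The function name, Service name, selector labels, and the single SMF-TLS port are illustrative assumptions. The Mission Control Agent creates the real Services itself, and their exact contents may differ.

```python
import json
from typing import Optional


def broker_service_manifest(
    name: str,
    selector: dict,
    ports: dict,
    traffic_policy: str = "Local",
    annotations: Optional[dict] = None,
) -> dict:
    """Build a hypothetical Service manifest of type LoadBalancer.

    traffic_policy is "Local" (preserves client source IPs) or "Cluster".
    annotations holds any provider-specific keys your load balancer needs;
    they vary by environment, so none are set by default.
    """
    if traffic_policy not in ("Local", "Cluster"):
        raise ValueError(f"unsupported externalTrafficPolicy: {traffic_policy}")
    metadata = {"name": name}
    if annotations:
        metadata["annotations"] = dict(annotations)
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": metadata,
        "spec": {
            "type": "LoadBalancer",
            "externalTrafficPolicy": traffic_policy,
            "selector": dict(selector),
            "ports": [
                {"name": port_name, "protocol": "TCP", "port": port, "targetPort": port}
                for port_name, port in ports.items()
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical name and labels; 55443 is the standard SMF-TLS port.
    manifest = broker_service_manifest(
        name="example-broker",
        selector={"app": "example-broker"},
        ports={"smf-tls": 55443},
    )
    print(json.dumps(manifest, indent=2))
```

Passing `traffic_policy="Cluster"` produces the alternative policy, which comes with the trade-offs that follow.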

Although Solace recommends Local, the Cluster policy is also supported if required. The Cluster policy can provide faster failover times for applications when needed, but it has the following drawbacks:

- Any node in your cluster can receive traffic and then forward it to the pod hosting the target event broker service. The additional network hop can affect performance.

- The source IP address of the connecting client becomes unavailable to your target event broker service, because it is replaced by the IP address of the forwarding node.

- Your event broker service generates additional TLS connection logs because of the TCP probing that is inherent in the Cluster policy setting.

You can learn more about the external traffic policy settings in the Kubernetes documentation.

To expose the cluster, an external IP address is mapped to the load balancer's private IP address. Some integrated load balancer solutions manage this mapping automatically. In addition, the customer is responsible for checking whether any special annotations are required to configure the network load balancer for their environment.

Public access from outside the customer's private network is optional. If it's needed, an Internet gateway is required to route a public IP address to the appropriate private IP address. If the customer's network blocks external traffic from the Internet, the customer must allowlist the IP address of the Solace Home Cloud. In this case, the customer must provide the details (URL, username, and password) of the HTTP/HTTPS proxy server to the Mission Control Agent during deployment.

Using an integrated load balancer has the following advantages and disadvantages compared to using NodePort (with an external network load balancer).

Advantages

- Simple usage. The client has a single endpoint to connect to for each service and can use standard or custom ports:

  - The customer can use standard TCP ports, because each IP address is dedicated to a single event broker service, so port numbers can't collide. For example, you can use port 55443, the standard port for SMF-TLS, which NodePort with an external IP address doesn't permit.

  - The customer can customize the TCP ports as required, which NodePort doesn't permit. This means that custom ports you specify when you create a service in the Solace Cloud Console can be used to access the event broker service.

- Easier operation. The load balancer takes care of routing traffic to operational worker nodes.
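
The port restriction follows from how NodePort works: by default, Kubernetes allocates node ports only from the range 30000-32767 (configurable with the kube-apiserver `--service-node-port-range` flag), whereas a LoadBalancer Service with a dedicated IP address can expose any valid TCP port. The Python sketch below checks ports against that default range. The helper names are hypothetical, and a cluster configured with a custom range would need different bounds.

```python
# Default NodePort range in Kubernetes (kube-apiserver --service-node-port-range).
# Assumption: the cluster has not been configured with a custom range.
NODEPORT_MIN = 30000
NODEPORT_MAX = 32767


def usable_as_nodeport(port: int) -> bool:
    """Return True if the port falls inside the default NodePort range."""
    return NODEPORT_MIN <= port <= NODEPORT_MAX


def usable_on_load_balancer(port: int) -> bool:
    """Return True for any valid TCP port; a dedicated IP avoids port collisions."""
    return 1 <= port <= 65535


if __name__ == "__main__":
    # 55443 is the standard SMF-TLS port; 31443 is an arbitrary in-range value
    # used only for comparison.
    for port in (55443, 31443):
        print(port, "NodePort:", usable_as_nodeport(port),
              "LoadBalancer:", usable_on_load_balancer(port))
```

Running it prints `55443 NodePort: False LoadBalancer: True` followed by `31443 NodePort: True LoadBalancer: True`, which is why clients using NodePort cannot connect on the standard SMF-TLS port.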

Disadvantages

- An integrated load balancer is typically harder to implement for on-premises locations. For on-premises locations, the customer typically needs to deploy an additional external network load balancer, which also requires some network planning.

- Each event broker service requires a network load balancer and one IP address, which can increase costs when there are multiple event broker services. In addition, each event broker service that's exposed publicly requires a public IP address.

For more information, see Type LoadBalancer in the Kubernetes documentation.

For more information about creating an external load balancer, see Create an External Load Balancer in the Kubernetes documentation.