Configuring Cluster Links with Replication

When you create cluster links to both members of a replication group, the two links share their data channels. As a result, the settings of the two links can conflict with each other, as explained in detail below.

Shared Data Channels

As described in Configuring Cluster Links, a DMR cluster link is composed of:

- one control channel

- one client profile

- one data channel per Message VPN. A data channel is made up of a bridge and a queue.

If a cluster link goes to a node that is part of a replication group, then that link's data channels are shared with the link to the other node of the replication group. Because of this, the pair of links shares a single bridge per Message VPN and a single link queue per Message VPN.

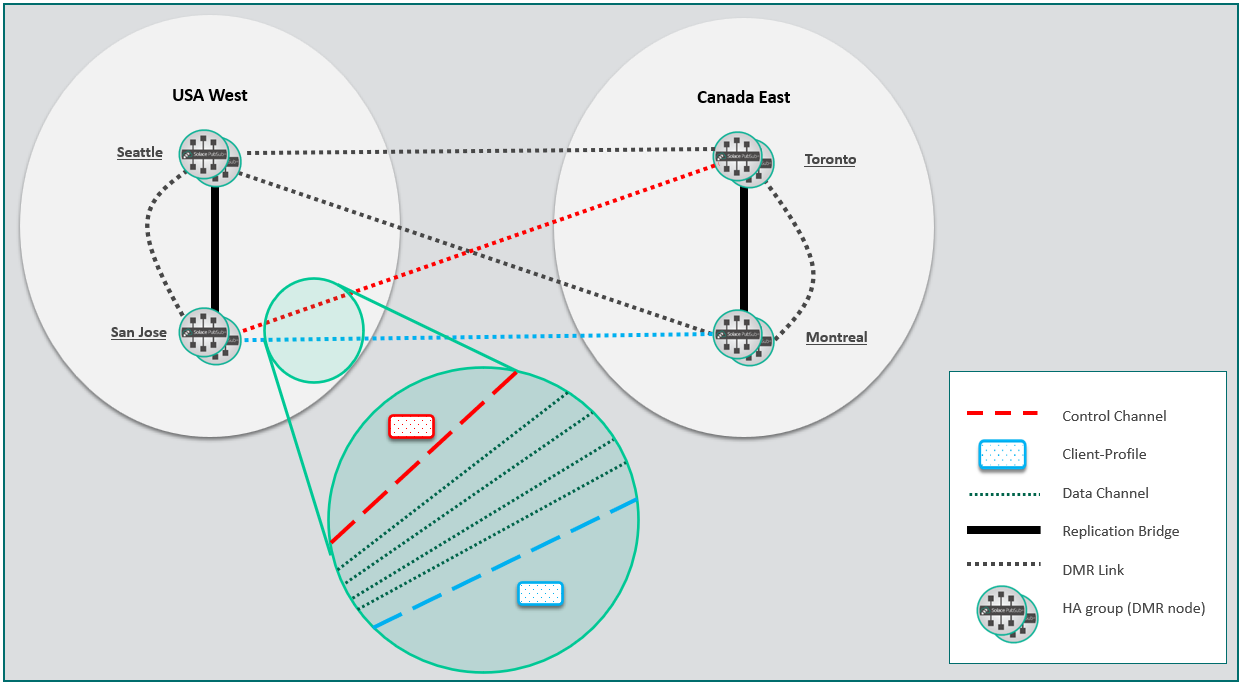

Consider the example shown in the following diagram:

- Each region (depicted by a gray oval) is a separate DMR cluster.

- In each region there are two nodes, each of which consists of one High-Availability (HA) pair.

- Each node in the network is connected to every other node by a DMR link:

  - Nodes in different clusters (regions, in this example) are connected by external DMR links.

  - Nodes within the same cluster (for example, Seattle and San Jose) are connected by internal DMR links.

- The cluster links from San Jose to Toronto (red) and Montreal (blue) share the same set of data channels (green; one per Message VPN), but each link has its own control channel and client profile. This is illustrated in the green detail view.
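The sharing relationship in this example can be sketched as a small data model. This is only an illustration: the class names, the `build_link_pair` helper, and the generated bridge, queue, control channel, and client profile names are hypothetical and do not reflect how the broker names or stores these objects.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class DataChannel:
    """One data channel per Message VPN: a bridge plus a queue."""
    message_vpn: str
    bridge: str  # hypothetical name, for illustration only
    queue: str   # hypothetical name, for illustration only


@dataclass
class ClusterLink:
    """A cluster link with its own control channel and client profile."""
    remote_node: str
    control_channel: str
    client_profile: str
    data_channels: Dict[str, DataChannel]  # keyed by Message VPN name


def build_link_pair(local: str, remote_a: str, remote_b: str,
                    vpns: List[str]) -> Tuple[ClusterLink, ClusterLink]:
    """Build two links to the members of a replication group.

    Both links reference the SAME DataChannel objects, modeling the
    single bridge and single queue per Message VPN that the pair shares.
    """
    shared = {
        vpn: DataChannel(vpn, bridge=f"bridge-{local}-{vpn}",
                         queue=f"queue-{local}-{vpn}")
        for vpn in vpns
    }
    link_a, link_b = (
        ClusterLink(remote,
                    control_channel=f"control-{local}-{remote}",
                    client_profile=f"profile-{local}-{remote}",
                    data_channels=shared)
        for remote in (remote_a, remote_b)
    )
    return link_a, link_b


toronto, montreal = build_link_pair("san-jose", "toronto", "montreal",
                                    ["vpn-a", "vpn-b"])
# The data channel for a given Message VPN is one object shared by both links.
print(toronto.data_channels["vpn-a"] is montreal.data_channels["vpn-a"])  # True
# The control channels are distinct.
print(toronto.control_channel != montreal.control_channel)  # True
```

Because both links hold a reference to the same channel objects, any attribute of the channel has to be derived from two parent configurations at once, which is where conflicts can arise.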

Configuring Links with Shared Data Channels

Because a shared data channel is not configured directly, but is instead constructed from the settings of its parent links, it must be set up based on the combined configurations of the two parent links. In many cases the two configurations can be combined in a compatible manner. However, this is not true for all settings.

For release 10.8.1 and later, if there is a conflict between the two parent links of a shared data channel and one parent link is administratively disabled, the other parent link is operationally UP. The shared data channel adopts the settings of the enabled link and is also operationally UP. Because the shared data channel remains up, you can change the settings of the disabled link so that they match the enabled link, and service continues without interruption. However, if both parent links are administratively enabled and there is a conflict between them, both parent links and the shared data channel are operationally DOWN.

For releases 10.8.0 and earlier, if there is a conflict between the two parent links of a shared data channel, both parent links and the shared data channel are operationally DOWN, regardless of the administrative status of either link.
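The release-dependent rules above can be summarized as a small decision function. This is a minimal sketch of the behavior as described in this section, not broker code. The function name and return format are hypothetical, and it covers only the case where the two parent links already conflict. Two points are assumptions rather than statements from the text: an administratively disabled link is reported as DOWN, and the shared data channel is DOWN when neither link is enabled.

```python
def conflict_outcome(release: str, a_enabled: bool, b_enabled: bool) -> dict:
    """Operational states when the two parent links have a conflict.

    release   -- broker release as a dotted string, for example "10.8.1"
    a_enabled -- administrative state of the first parent link
    b_enabled -- administrative state of the second parent link
    """
    version = tuple(int(part) for part in release.split("."))
    all_down = {"link_a": "DOWN", "link_b": "DOWN", "shared_channel": "DOWN"}

    # 10.8.0 and earlier: everything is DOWN regardless of admin status.
    if version < (10, 8, 1):
        return all_down

    # 10.8.1 and later, both links enabled: everything is DOWN.
    # Both links disabled is not described in the text; treating it as
    # all DOWN is an assumption.
    if a_enabled == b_enabled:
        return all_down

    # 10.8.1 and later, exactly one link enabled: the enabled link and
    # the shared channel are UP, and the channel adopts that link's settings.
    # Reporting the disabled link as DOWN is an assumption.
    return {
        "link_a": "UP" if a_enabled else "DOWN",
        "link_b": "UP" if b_enabled else "DOWN",
        "shared_channel": "UP",
    }
```

For example, `conflict_outcome("10.8.1", True, False)` reports the first link and the shared channel as UP, while the same call with `"10.8.0"` reports everything as DOWN.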

When the parent links are operationally DOWN due to a conflicting attribute, use the `show cluster <cluster-name-pattern> link *` command. The conflicting attribute is shown in the Reason field.

The following table details the settings where conflicts can occur:

| Link Setting | Details |
|---|---|
| authenticationScheme | The bridge as a whole must have a single authentication scheme. That is, both links must use the same authentication scheme. |
| initiator | The initiator must be consistent between both links, so that initiation happens the same way regardless of which DR mate is active. |
| span | The topology relationship (internal or external) for both links between a node and a remote DR-pair must be the same. |
|  | These values are associated with the single shared queue, and therefore must be the same for both links. |
|  | This value is associated with the single shared queue; this setting must be the same for both links. |
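Before enabling both links, it can help to compare their settings for the attributes in the table. The sketch below does this for link configurations held as plain dictionaries. The `find_conflicts` helper and the dictionary layout are hypothetical, the setting values are illustrative only, and only the three settings named in the table are checked by default. Pass additional names through the `settings` argument to cover the queue-related settings.

```python
# Settings named in the table that must match on both parent links.
NAMED_SHARED_SETTINGS = ("authenticationScheme", "initiator", "span")


def find_conflicts(link_a: dict, link_b: dict,
                   settings=NAMED_SHARED_SETTINGS) -> list:
    """Return the names of settings whose values differ between two links."""
    return [name for name in settings
            if link_a.get(name) != link_b.get(name)]


# Illustrative configurations for the two links to a replication group.
link_to_toronto = {
    "authenticationScheme": "basic",
    "initiator": "lexical",
    "span": "external",
}
link_to_montreal = {
    "authenticationScheme": "basic",
    "initiator": "lexical",
    "span": "internal",
}

print(find_conflicts(link_to_toronto, link_to_montreal))  # ['span']
```

An empty list means the checked settings are consistent. It does not guarantee that the links come up, because settings outside the checked list may still differ.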